Data Streaming Workshop

About This Program

Real-time data analysis is increasingly relevant, as is the need for systems that respond quickly to changing patterns in data. This interactive workshop covers data streaming from its fundamentals to some of its most advanced concepts, giving participants the chance to strengthen their foundations and deepen their understanding of data flows. We will review architectures and key concepts for building resilient pipelines that meet the needs of the industry, along with many of the most popular technologies in the ecosystem.

Why You Should Apply

Data streaming used to be reserved for a few select niches, such as media streaming and stock market data. Today, many more companies across different industries are adopting it. Data streams allow organizations to process data in real time, so they can monitor every aspect of their business and react to relevant events much more quickly than other data processing methods allow. As a result, knowing how to design and implement real-time systems gives both companies and professionals a competitive advantage.

What Will You Achieve?

By participating in this workshop, participants will gain a better understanding of the data streaming ecosystem, explore some of the most widely used technologies, and complete exercises that illustrate the key concepts of a streaming data pipeline in action.

Our Training Model

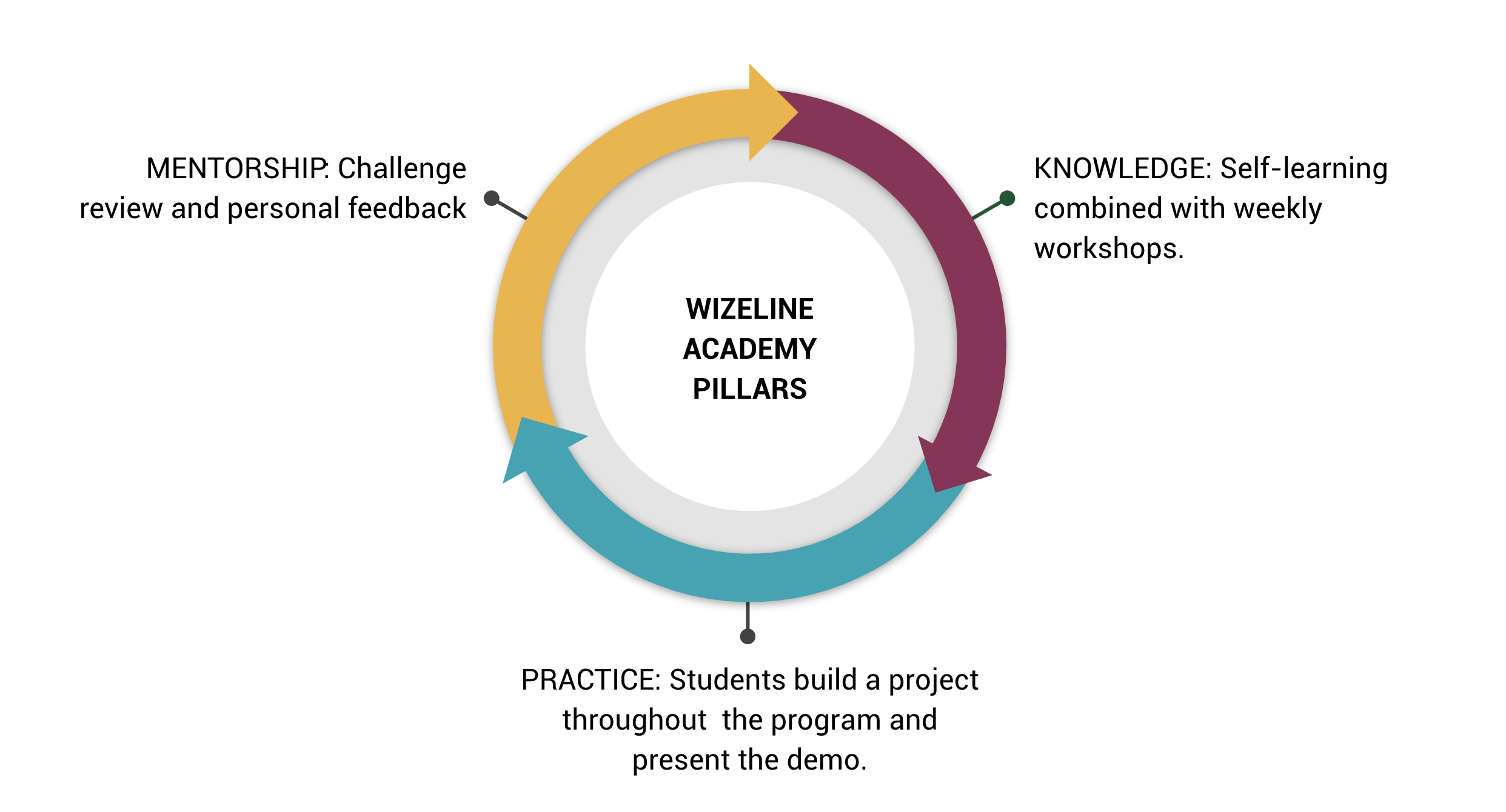

We believe in the power of learning by doing, along with the value of getting advice and support from professionals with experience in the industry. Our workshop model is built on three main pillars:

General Information and Dates

- Duration: 8 hours

- Time commitment:

  - Live sessions: four sessions, each from 6 to 8 pm

    - Monday, August 22

    - Wednesday, August 24

    - Friday, August 26

    - Monday, August 29

  - Self-paced learning: available 24/7

- Relevant dates:

  - Application Opening: Wednesday, August 3

  - Application Closure: Wednesday, August 17

  - Workshop Kickoff: Monday, August 22

  - Workshop Closure: Monday, August 29

Who Should Take This Course?

Participants must have an intermediate or higher experience level, since the topics covered are fairly complex. Participants are expected to have experience designing and implementing streaming ETL or, failing that, extensive batch processing experience with data processing frameworks, as well as experience with Scala or Java. The ideal participant is proactive, critical, and purpose-driven, with strong analytical skills, a broad view of the ecosystem, and leadership skills.

Power Skills:

- Leadership

- Consulting mindset

- Proactivity

Technical Skills:

- Scala or Java

- Apache Spark

- Big data knowledge

Requirements

- Spark and Java 8 installed

- Experience developing with Python 3.7+

- Apache Spark knowledge is recommended

- Kafka knowledge is recommended

How Can You Participate?

- Read the candidate profile.

- Apply to the program by filling out the application form.

- We will notify you by email regarding your participation in the training.

Application Closed

We appreciate your interest; unfortunately, the application period for this event has ended. For more information, or if you have any questions, you can reach us by email at academy@wizeline.com

Lecturers

Luis Martínez

Data Engineer

Luis is a Data Engineer with over five years of experience in the banking industry, where he has applied a startup philosophy and the Scrum framework. He has led teams of 5 to 10 engineers. He loves working with large amounts of data, especially when it also involves building and deploying Python, Scala, or Java software. He enjoys researching and discovering innovative solutions that are optimal for the scenario at hand. His career is focused on cloud platforms such as AWS and GCP.

Raul García

Senior Data Engineer

With 11 years of experience in the IT industry, Raul has in-depth knowledge of Hadoop and Google Cloud Platform (GCP). Previously, he was a GCP Support Representative (on behalf of Google), working very closely with clients who were using the latest technologies. As a Data Engineer at Wizeline, he has expanded his experience in troubleshooting, designing, and implementing data solutions with BigQuery, GCS, Dataflow, and Cloud Composer.

Nicolás González

Senior Software Engineer

Nicolás is an accomplished, motivated, and versatile professional. He’s a Wizeline Senior Software Engineer with over seven years of experience in the telecommunications, retail, banking, and technology industries. Known for his personable approach to clients and providers as well as for delivering operational excellence, he is innovative and driven, a consistent overachiever, an excellent coordinator and organizer, and a responsible and efficient PM.

Carlos López

Data Engineer

Carlos has over six years of experience in software development and data, primarily in the consultancy, logistics, and mobility industries. He has led teams of 2 to 3 people. Carlos is most passionate about solving complex business problems that involve collecting, interpreting, analyzing, and modeling data.

Luis Alfredo López Peñates

Data Engineer

Luis has over three years of relevant work experience as a data engineer, primarily in the financial services and retail industries. His career has focused on on-premises big data solutions, but he has also worked with cloud solutions. He is most passionate about building reliable, scalable pipelines for data extraction and analysis using leading big data and cloud technologies, and about being helpful whenever he can.

Daniel Andrés Melguizo Velasquez

Data Engineer

Daniel is a data engineer with over 10 years of experience working with data for retail and financial services companies, primarily using big data and business intelligence technologies. He has participated in the development of data lakes, cubes, and warehouses. One of his biggest passions is solving the challenges that data presents. He is also interested in cloud technologies and data analytics services.

David Torres

Senior Software Engineer

David has more than five years of experience developing, testing, documenting, and deploying streaming applications. He has always been passionate about proactively learning and developing new technologies. He has designed and developed real-time applications using Confluent Kafka, helping customers make decisions based on the latest data their systems generate and build real-time dashboards and ETL processes. He is constantly researching to learn new things and improve his skills.